Understanding Principal Component Analysis

Principal Component Analysis (PCA)

Principal Component Analysis (PCA) is a statistical technique used to reduce the dimensionality of complex datasets while preserving as much variability as possible. It transforms the original correlated variables into a new set of uncorrelated variables called principal components. These components are ordered so that the first few capture most of the variation in the data, which makes it easier to visualize patterns, reduce noise, and in many cases speed up or improve downstream models.

PCA is widely used in data science, machine learning, image processing, and exploratory data analysis. By projecting data onto a lower-dimensional space, it helps reveal hidden structure, highlight relationships between features, and simplify models while keeping most of the essential information.

The PCA process typically involves standardizing the data, computing the covariance matrix, and then finding its eigenvalues and eigenvectors. The eigenvectors define the directions of maximum variance (principal components), while the eigenvalues indicate how much variance each component explains. Analysts then select the top components based on explained variance, often visualized with a scree plot.
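The steps described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a production implementation: the function name `pca` and the made-up dataset are assumptions for this example only.

```python
import numpy as np

def pca(X, n_components):
    """Minimal PCA via eigen-decomposition of the covariance matrix."""
    # 1. Standardize: zero mean and unit variance for every column.
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    # 2. Covariance matrix of the standardized data (features x features).
    cov = np.cov(Z, rowvar=False)
    # 3. Eigen-decomposition. eigh is intended for symmetric matrices
    #    and returns eigenvalues in ascending order.
    eigvals, eigvecs = np.linalg.eigh(cov)
    order = np.argsort(eigvals)[::-1]            # largest variance first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # 4. Share of the total variance explained by each component.
    explained_ratio = eigvals / eigvals.sum()
    # 5. Project the data onto the top components.
    scores = Z @ eigvecs[:, :n_components]
    return scores, eigvecs[:, :n_components], explained_ratio

# Made-up data: 200 samples, 3 features, the first two strongly correlated.
rng = np.random.default_rng(0)
base = rng.normal(size=200)
X = np.column_stack([
    base + 0.1 * rng.normal(size=200),
    base + 0.1 * rng.normal(size=200),
    rng.normal(size=200),
])

scores, components, explained_ratio = pca(X, n_components=2)
print(scores.shape)       # (200, 2)
print(explained_ratio)    # three fractions, largest first, summing to 1
```

Plotting `explained_ratio` against the component number gives the scree plot mentioned above.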

PCA assumes linear relationships and is sensitive to the scale of each feature, so preprocessing (typically standardizing every feature) is crucial. Despite its simplicity, it remains one of the most widely used and interpretable tools for dimensionality reduction, feature extraction, and data visualization in high-dimensional spaces.
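To see why scaling matters, here is a small sketch using two hypothetical features measured in very different units (height in metres and income in currency units). The data and the helper name `first_component` are invented for illustration.

```python
import numpy as np

def first_component(X):
    """Return the unit-length direction of maximum variance of X."""
    cov = np.cov(X, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigenvalues in ascending order
    return eigvecs[:, -1]                    # eigenvector of the largest one

rng = np.random.default_rng(1)
height_m = rng.normal(1.7, 0.1, size=500)        # spread of about 0.1
income = rng.normal(50_000, 10_000, size=500)    # spread of about 10,000
X = np.column_stack([height_m, income])

# Without scaling, the feature with the larger numbers dominates.
raw_pc1 = first_component(X)

# After standardizing, both features get an equal say.
Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
scaled_pc1 = first_component(Z)

print(np.abs(raw_pc1))      # almost all weight on income
print(np.abs(scaled_pc1))   # weight shared between the two features
```

On the raw data, the first component is essentially just "income", only because its numbers are bigger. Standardizing removes that accident of units.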

Principal Component Analysis (PCA) Explained for Laymen

A Simple, Intuitive Guide with Examples

Introduction

In today's data-driven world, we often deal with too much information at once. Whether it's student marks, medical test results, or business data, handling many variables together can become confusing and inefficient.

This is where Principal Component Analysis (PCA) comes in.

PCA is a simple yet powerful technique that helps us reduce complexity while keeping most of the important information. In this blog, we'll explain PCA in plain language, with as little maths and machine learning jargon as possible.

The Core Problem PCA Solves

Imagine trying to understand something using too many measurements:

- A student evaluated using 10 subjects
- A patient tested using 40 medical parameters
- A business analyzed using 100 performance indicators

More data does not always mean more clarity. Often, many measurements are closely related and repeat the same information.

PCA helps by asking:

"Can we describe this data using fewer, smarter variables?"

What is PCA in Simple Words?

Principal Component Analysis (PCA) is a method that:

- Combines related information
- Finds the most important patterns in data
- Replaces many variables with a few meaningful summaries

Think of PCA as data compression with intelligence.

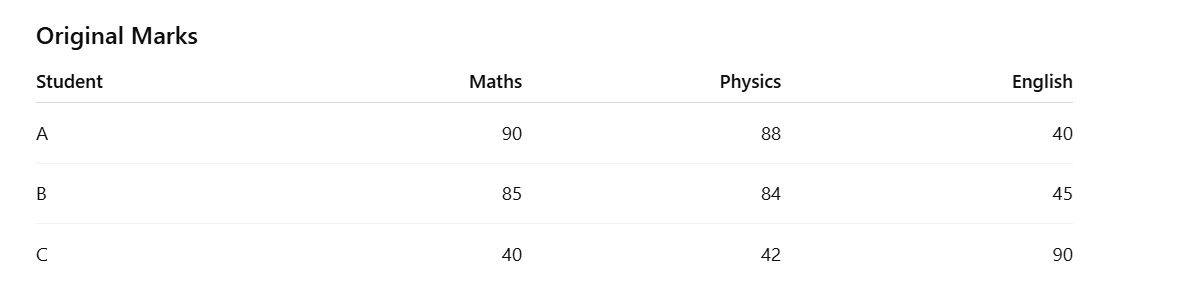

A Student Marks Example

Imagine three subjects:

- Maths
- Physics
- English

Observations:

- Maths and Physics move together
- English behaves differently

PCA notices this pattern automatically.
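What "noticing the pattern" means can be shown with a correlation matrix. The marks below are made up for six imaginary students, purely to illustrate the idea.

```python
import numpy as np

# Made-up marks for six students; columns are Maths, Physics, English.
marks = np.array([
    [90, 88, 70],
    [75, 78, 85],
    [60, 58, 60],
    [45, 50, 72],
    [82, 80, 58],
    [55, 52, 80],
])

# Correlation matrix: values near 1 mean two subjects rise and fall together,
# values near 0 mean they behave independently.
corr = np.corrcoef(marks, rowvar=False)
print(np.round(corr, 2))
```

In this toy table the Maths-Physics entry is close to 1, while the entries involving English are much nearer 0. PCA starts from exactly this kind of information.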

What PCA Does Next

Instead of keeping all subjects separately, PCA creates new combined scores:

- PC1: Science Strength (mostly Maths + Physics)
- PC2: Language Strength (English compared to Science)

Now:

- 3 subjects → 2 meaningful numbers
- Almost all important information is preserved
- Comparison becomes easier
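Here is a rough sketch of the "3 subjects to 2 numbers" idea in NumPy. The marks are simulated, and the hidden "science" and "language" factors are assumptions built into the simulation so that Maths and Physics move together. Real data will give different weights and signs.

```python
import numpy as np

rng = np.random.default_rng(42)
n_students = 300
science = rng.normal(size=n_students)    # hidden "science strength"
language = rng.normal(size=n_students)   # hidden "language strength"

# Made-up marks out of 100; Maths and Physics share the science factor.
maths = 65 + 12 * science + 3 * rng.normal(size=n_students)
physics = 62 + 12 * science + 3 * rng.normal(size=n_students)
english = 70 + 10 * language + 3 * rng.normal(size=n_students)
marks = np.column_stack([maths, physics, english])

# Standardize, then eigen-decompose the covariance matrix.
Z = (marks - marks.mean(axis=0)) / marks.std(axis=0, ddof=1)
eigvals, eigvecs = np.linalg.eigh(np.cov(Z, rowvar=False))
order = np.argsort(eigvals)[::-1]        # largest variance first
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

explained = eigvals / eigvals.sum()
student_scores = Z @ eigvecs[:, :2]      # 3 subjects -> 2 numbers per student

print(np.round(eigvecs[:, :2], 2))       # rows: Maths, Physics, English
print(np.round(explained[:2].sum(), 3))  # share of variance kept by PC1 + PC2
```

In the printed weights, the first column (PC1) leans on Maths and Physics and the second (PC2) leans on English. The sign of each column is arbitrary, so a "strength" score may come out flipped.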

Why PCA is Called "Unsupervised"

PCA does not know:

- Who passed or failed
- Who is a topper
- Any class labels or outcomes

It only looks at relationships between numbers.

That's why PCA is classified as an unsupervised machine learning technique.

Real-Life Uses of PCA

PCA is widely used across industries:

📸 Image Processing

- Compress images
- Remove noise
- Speed up recognition systems

🏥 Healthcare

- Combine dozens of test results
- Identify overall health indicators

🛒 Business & Marketing

- Understand customer behavior
- Reduce hundreds of features into key traits

🤖 Machine Learning

- Reduce data size before training models
- Improve speed and performance

What PCA Does NOT Do

It's important to understand PCA's limits:

- ❌ It does not predict outcomes
- ❌ It does not classify data
- ❌ It does not understand meaning, only patterns

PCA is about structure discovery, not decision-making.

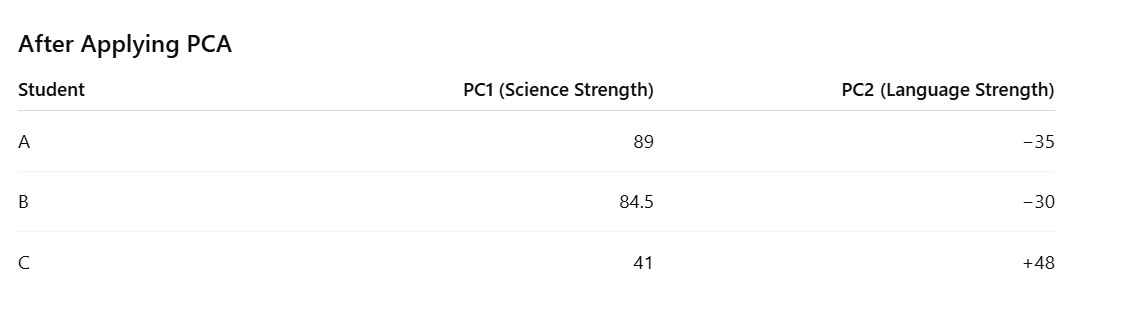

🩺📄 The "Doctor's Test Report" Cartoon

Panel 1: Too many reports

A doctor is sitting at a desk covered with lab reports:

- Blood sugar
- HbA1c
- Cholesterol
- Triglycerides
- BP
- ECG
- BMI
- Heart rate

The doctor looks overwhelmed 😵 and says:

"So many numbers… what's the big picture of this patient?"

👉 This is high-dimensional data.

Panel 2: PCA enters the clinic

A calm assistant named PCA walks in 🧑‍⚕️✨ and says:

"Don't worry. I'll summarize these tests."

Important:

- PCA does not know if the patient is sick or healthy
- PCA does not diagnose
- PCA only looks at how test values move together

Panel 3: PCA finds patterns

PCA notices:

- Blood sugar & HbA1c rise together
- Cholesterol & Triglycerides rise together
- BP, ECG & Heart rate move together

So PCA draws three big arrows on the report:

➡️ Arrow 1: Sugar Control

⬆️ Arrow 2: Lipid Health

↗️ Arrow 3: Cardiac Stress

These arrows are the principal components.

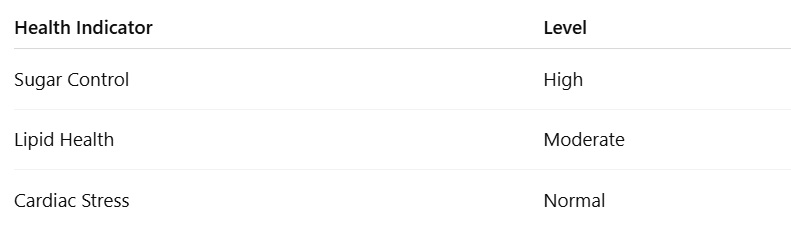

Panel 4: Simplified health summary

Instead of 8 test values, the report now shows three summary scores: Sugar Control, Lipid Health, and Cardiac Stress.

The doctor smiles 😄:

"Now I understand the patient instantly!"

Almost all important information is still there — just cleaner and clearer.

🧠 What PCA did (in simple terms)

- Combined related medical tests
- Reduced complexity
- Found hidden structure
- Made no medical decisions

⚠️ Very important note

PCA does NOT replace a doctor.

It:

- ❌ does not diagnose
- ❌ does not predict disease
- ✅ only summarizes data patterns

One-line takeaway 🎯

PCA turns many medical test numbers into a few overall health indicators without knowing the diagnosis.

MCQs: Principal Component Analysis (PCA)

1. What is the main purpose of Principal Component Analysis (PCA)?

A. To predict future values

B. To classify data into groups

C. To reduce the number of variables while keeping important information

D. To increase data size

✅ Correct Answer: C

Explanation: PCA simplifies data by reducing dimensions without losing much information.

2. PCA is considered an unsupervised technique because it:

A. Uses class labels

B. Needs training and testing data

C. Works only with images

D. Does not use output labels

✅ Correct Answer: D

Explanation: PCA looks only at feature relationships and ignores labels.

3. In PCA, what does the first principal component (PC1) represent?

A. The smallest variation in data

B. The direction of maximum variance

C. Random direction

D. The class boundary

✅ Correct Answer: B

Explanation: PC1 captures the maximum spread (variance) in the data.

4. In the student-marks example, Maths and Physics were combined because:

A. They had low marks

B. They were compulsory subjects

C. They showed similar patterns

D. The teacher chose them manually

✅ Correct Answer: C

Explanation: PCA combines variables that move together.

5. Which of the following best describes a "principal component"?

A. An original feature

B. A class label

C. A new combined variable

D. A prediction

✅ Correct Answer: C

Explanation: Principal components are new variables created from existing ones.

6. PCA works primarily on which type of information?

A. Labels and categories

B. Relationships between variables

C. Text meaning

D. Logical rules

✅ Correct Answer: B

Explanation: PCA focuses on how variables vary together.

7. What happens to less important variations in PCA?

A. They are highlighted

B. They are amplified

C. They are ignored or removed

D. They are labeled

✅ Correct Answer: C

Explanation: PCA keeps the directions with the most variance and drops the low-variance ones, which often contain mostly noise.

8. Which of the following is NOT a real-world application of PCA?

A. Image compression

B. Noise reduction

C. Feature reduction before ML models

D. Email spam prediction by itself

✅ Correct Answer: D

Explanation: PCA alone does not predict or classify.

9. PCA creates new axes that are:

A. Random

B. Parallel to original axes

C. Always horizontal and vertical

D. Orthogonal (at right angles) to each other

✅ Correct Answer: D

Explanation: Principal components are always perpendicular (orthogonal) to one another.

10. In the student example, PC1 mainly represented:

A. English marks

B. History knowledge

C. Science strength

D. Attendance

✅ Correct Answer: C

Explanation: PC1 captured the combined variation of Maths and Physics.

11. PCA reduces dimensionality by:

A. Deleting rows

B. Deleting students

C. Combining correlated features

D. Guessing missing values

✅ Correct Answer: C

Explanation: Correlated features are merged into principal components.

12. PCA is most useful when:

A. All features are unrelated

B. Data has many correlated variables

C. Labels are available

D. Data is very small

✅ Correct Answer: B

Explanation: PCA shines when features are highly correlated.

13. Which statement about PCA is TRUE?

A. PCA improves model accuracy in all cases

B. PCA always keeps all information

C. PCA may slightly lose information

D. PCA requires class labels

✅ Correct Answer: C

Explanation: PCA trades a small information loss for simplicity.

14. What does variance mean in simple terms?

A. Number of rows

B. Spread of data values

C. Average value

D. Class difference

✅ Correct Answer: B

Explanation: Variance measures how spread out the data is.

15. PCA is best described as:

A. A classification algorithm

B. A prediction model

C. A dimensionality reduction technique

D. A clustering method

✅ Correct Answer: C

Explanation: PCA reduces dimensions while preserving structure.
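For readers who want to check a few quiz answers numerically, here is a hedged NumPy sketch covering questions 7, 9, and 13. The dataset is made up (5 features driven by 2 hidden factors), and the 95% threshold is just a common illustrative choice, not a rule.

```python
import numpy as np

rng = np.random.default_rng(7)
# Made-up data: 5 features driven by 2 hidden factors plus a little noise.
factors = rng.normal(size=(400, 2))
mixing = rng.normal(size=(2, 5))
X = factors @ mixing + 0.1 * rng.normal(size=(400, 5))

Xc = X - X.mean(axis=0)                        # centre each feature
eigvals, eigvecs = np.linalg.eigh(np.cov(Xc, rowvar=False))
order = np.argsort(eigvals)[::-1]              # largest variance first
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# Q9: the component directions are orthogonal unit vectors.
gram = eigvecs.T @ eigvecs
print(np.allclose(gram, np.eye(5)))            # True

# Q7: keep the fewest components that explain at least 95% of the variance;
# the remaining low-variance directions are dropped.
cumulative = np.cumsum(eigvals) / eigvals.sum()
k = int(np.searchsorted(cumulative, 0.95) + 1)
print(k, np.round(cumulative, 3))

# Q13: rebuilding the data from only k components loses a little information.
W = eigvecs[:, :k]
X_rebuilt = (Xc @ W) @ W.T + X.mean(axis=0)
lost = ((X - X_rebuilt) ** 2).sum() / (Xc ** 2).sum()
print(round(float(lost), 4))                   # equals 1 - cumulative[k - 1]
```

The fraction of variance lost is exactly the share belonging to the dropped components, which is why choosing `k` from the cumulative explained variance controls how much information is given up.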