Mastering Cross-Validation

Cross-validation is one of the most important concepts in machine learning and data science, yet it is often misunderstood. Whether you are a student, a data analyst, or a machine learning practitioner, understanding cross-validation helps you build models that truly generalize to real-world data.

In this blog post, we'll explain cross-validation in simple language, with examples, the main types, their advantages and limitations, and a set of practice MCQs.

What is Cross-Validation?

Cross-validation is a model evaluation technique used to assess how well a machine learning model performs on unseen data.

Instead of training and testing a model only once, cross-validation:

Trains the model multiple times

Uses different subsets of data each time

Produces a more reliable performance estimate

In simple terms, cross-validation checks whether your model is learning patterns or just memorizing data.

Why is Cross-Validation Important?

Without cross-validation, models may suffer from:

Overfitting – performing well on training data but poorly on new data

Unreliable accuracy estimates

Poor real-world performance

Cross-validation helps to:

Measure true generalization performance

Compare multiple models fairly

Tune hyperparameters effectively

Simple Real-Life Analogy

Imagine evaluating a student's knowledge:

Asking only one question gives an unreliable result

Asking multiple questions and averaging the score gives a fairer result

Cross-validation works the same way for machine learning models.

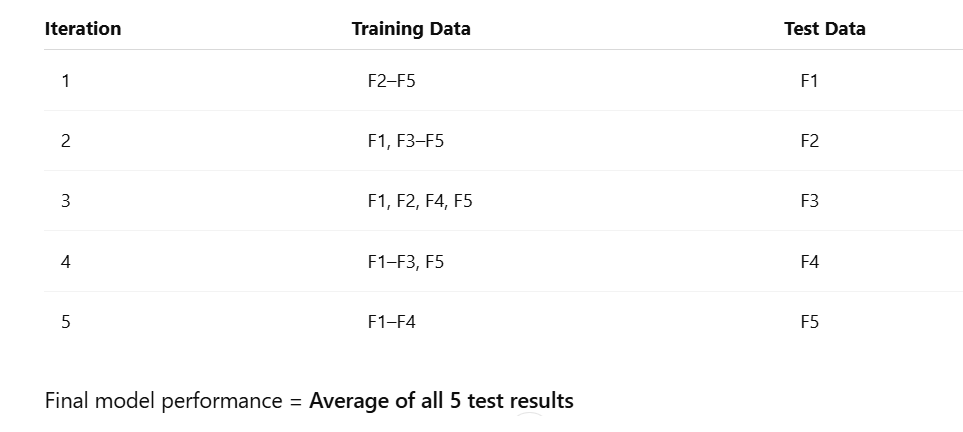

K-Fold Cross-Validation (Most Common Method)

How K-Fold Cross-Validation Works

Split the dataset into K equal (or nearly equal) parts, called folds

Use K−1 folds for training and 1 fold for testing

Repeat the process K times, changing the test fold each time

Compute the average performance score
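The splitting part of these steps can be sketched in plain Python. This is a minimal illustration rather than a library implementation: the function name `k_fold_indices` and its `shuffle`/`seed` parameters are our own choices. When the number of samples is not divisible by K, the first folds get one extra sample so that fold sizes differ by at most one. The final averaging step is left to the caller. In practice you would usually use scikit-learn's `KFold` class instead.

```python
import random


def k_fold_indices(n_samples, k, shuffle=True, seed=0):
    """Yield (train_indices, test_indices) once for each of the k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    if shuffle:
        random.Random(seed).shuffle(indices)  # reproducible shuffle
    # Step 1: split into k folds; the first (n_samples % k) folds get one extra sample.
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        # Step 2: this fold is the test set; the other k - 1 folds form the training set.
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        # Step 3: move on so that a different fold is used for testing next time.
        start += size


# Without shuffling, 10 samples and K = 5 give test folds [0, 1], [2, 3], ..., [8, 9].
for train, test in k_fold_indices(10, 5, shuffle=False):
    print("test:", test, "train:", train)
```

Every sample appears in exactly one test fold, so across the K iterations the whole dataset is used for testing once.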

Example: 5-Fold Cross-Validation

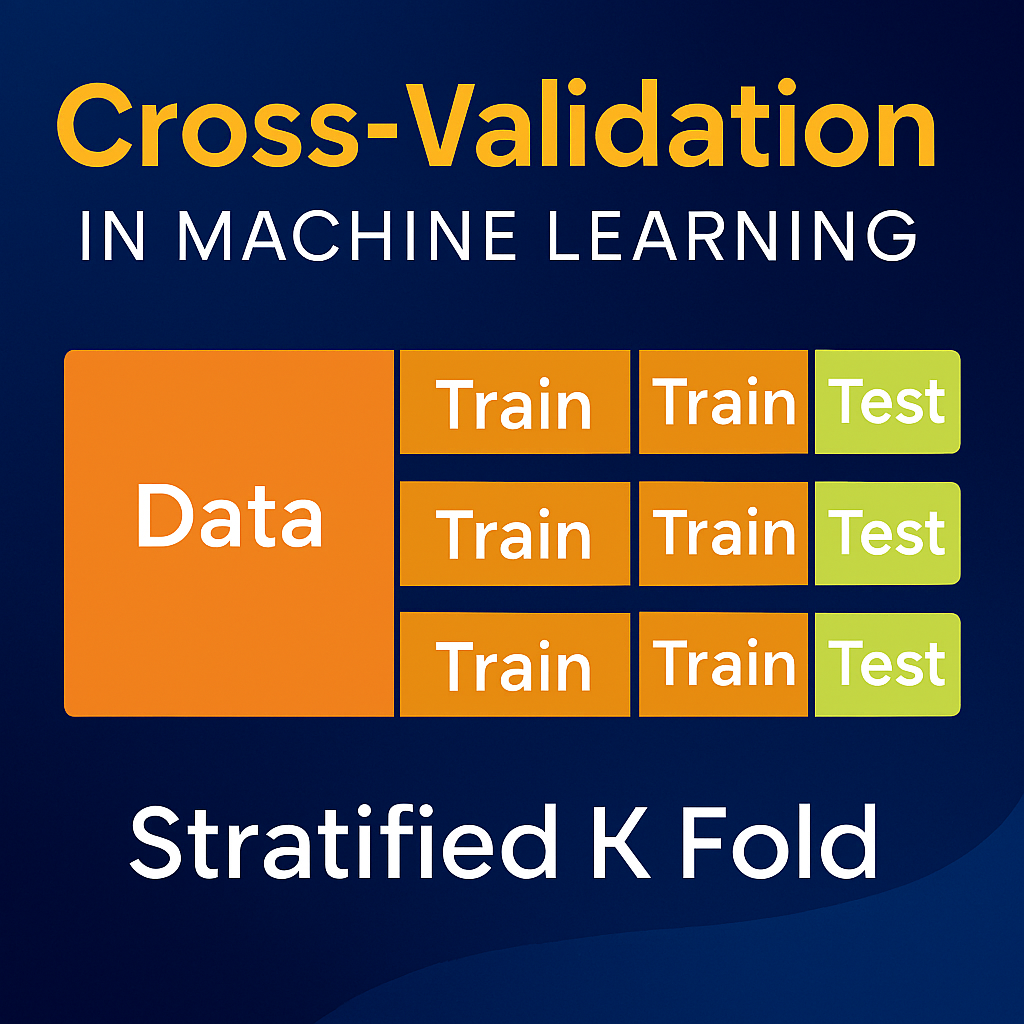
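To make the 5-fold example concrete, here is a small end-to-end sketch that also performs the final averaging step. The toy dataset (20 one-dimensional points in two well-separated classes), the nearest-centroid classifier, and all helper names are hypothetical, chosen only so the example runs without external libraries. For real models, scikit-learn's `cross_val_score` runs this kind of loop for you.

```python
import random
from statistics import mean


def k_fold_splits(n_samples, k, seed=0):
    """Shuffle the indices once, deal them into k nearly equal folds, yield each split."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


def fit_nearest_centroid(xs, ys):
    """Toy 1-D classifier: store the mean feature value of each class."""
    return {label: mean(x for x, y in zip(xs, ys) if y == label) for label in set(ys)}


def predict(centroids, x):
    """Predict the class whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


def cross_val_accuracy(xs, ys, k=5, seed=0):
    """Train and test k times, then return the per-fold accuracies and their average."""
    scores = []
    for train, test in k_fold_splits(len(xs), k, seed):
        model = fit_nearest_centroid([xs[i] for i in train], [ys[i] for i in train])
        correct = sum(predict(model, xs[i]) == ys[i] for i in test)
        scores.append(correct / len(test))
    return scores, mean(scores)


# Hypothetical toy data: class 0 at 0-9, class 1 at 20-29 (20 samples in total).
xs = list(range(10)) + list(range(20, 30))
ys = [0] * 10 + [1] * 10
scores, average = cross_val_accuracy(xs, ys, k=5)
print("per-fold accuracy:", scores)
print("average accuracy:", average)
```

Because the toy classes are far apart, every fold scores 1.0 here. On real data the per-fold scores usually differ, and that spread is useful information in its own right.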

Stratified K-Fold Cross-Validation

Stratified K-Fold is a variation of K-Fold used for classification problems, especially when the dataset is imbalanced.

Key Feature

Each fold maintains the same class distribution as the original dataset

Why It Matters

If one class dominates the dataset, normal K-Fold may produce test sets with very few or no minority samples, leading to misleading accuracy.

Stratified K-Fold solves this problem.

1️⃣ Why Do We Need Stratification?

Problem with Normal K-Fold

If classes are imbalanced, random splitting may produce:

A fold with very few or zero minority samples

Misleading accuracy

Example (Imbalanced Dataset)

Original dataset:

Class 1 → 90 samples

Class 0 → 10 samples

❌ Normal K-Fold might create a fold with 0 Class-0 samples

✔ Stratified K-Fold prevents this
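The failure case is easiest to reproduce when the rows are stored sorted by class and the folds are cut in order without shuffling. The sketch below assumes exactly that situation (the 90/10 labels from the example above, K = 5); the helper name `class_counts_per_fold` is hypothetical. Shuffling first makes an empty fold less likely, but it does not rule it out, which is why stratification is used.

```python
from collections import Counter


def class_counts_per_fold(labels, k):
    """Cut labels into k consecutive folds (no shuffling) and count each class per fold.

    Assumes len(labels) is divisible by k.
    """
    fold_size = len(labels) // k
    return [Counter(labels[f * fold_size:(f + 1) * fold_size]) for f in range(k)]


# Imbalanced labels stored in class order: 90 samples of Class 1, then 10 of Class 0.
labels = [1] * 90 + [0] * 10

for fold, counts in enumerate(class_counts_per_fold(labels, k=5), start=1):
    # Counter returns 0 for a class that never appears in the fold.
    print(f"Fold {fold}: Class 1 = {counts[1]}, Class 0 = {counts[0]}")
```

Folds 1 to 4 contain no Class 0 samples at all, and fold 5 holds all ten of them, so four of the five test scores say nothing about the minority class.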

2️⃣ Core Idea (One-Line Definition)

Stratified K-Fold ensures each fold has approximately the same class distribution as the full dataset.

Example: Spam Dataset (100 samples)

Spam → 80 samples

Not Spam → 20 samples

5-Fold Stratified CV

Each fold (20 samples) contains:

16 Spam

4 Not Spam

Every fold is a mini-replica of the original dataset.
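The per-fold counts follow from dividing each class total by K = 5, which is why every fold keeps the same 80/20 ratio as the full dataset:

$$
\frac{80}{5} = 16 \ \text{Spam}, \qquad \frac{20}{5} = 4 \ \text{Not Spam}, \qquad \frac{16}{16 + 4} = 0.8 = \frac{80}{100}
$$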

3️⃣ Algorithm Workflow

Separate the data by class

Divide each class into K (nearly) equal parts

Combine one part from each class to form a fold

Repeat K times, using each fold once as the test set
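The four workflow steps can be sketched in plain Python. This is one possible implementation, not the exact algorithm of any particular library: the names `stratified_folds` and `stratified_splits` are hypothetical, and the samples of each class are dealt round-robin across the folds, which is a simple way to divide every class into K nearly equal parts. scikit-learn's `StratifiedKFold` is the usual ready-made option.

```python
import random
from collections import Counter, defaultdict


def stratified_folds(labels, k, seed=0):
    """Return k lists of indices whose class mix mirrors the full label list."""
    # Step 1: separate sample indices by class.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    offset = 0
    for label in sorted(by_class):  # assumes labels are sortable (e.g. str or int)
        members = by_class[label]
        rng.shuffle(members)  # random order within the class
        # Steps 2 and 3: deal this class's samples across the k folds in turn,
        # so each fold receives a nearly equal share of the class.
        for position, idx in enumerate(members):
            folds[(offset + position) % k].append(idx)
        # Start the next class where this one stopped, keeping fold sizes balanced.
        offset += len(members)
    return folds


def stratified_splits(labels, k, seed=0):
    """Step 4: use each fold once as the test set and the rest for training."""
    folds = stratified_folds(labels, k, seed)
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


labels = ["Spam"] * 80 + ["Not Spam"] * 20
for fold, (train, test) in enumerate(stratified_splits(labels, k=5), start=1):
    print(f"Fold {fold}:", dict(Counter(labels[i] for i in test)))
```

With the 80/20 spam dataset, each of the five test folds contains 16 Spam and 4 Not Spam samples, matching the example above.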

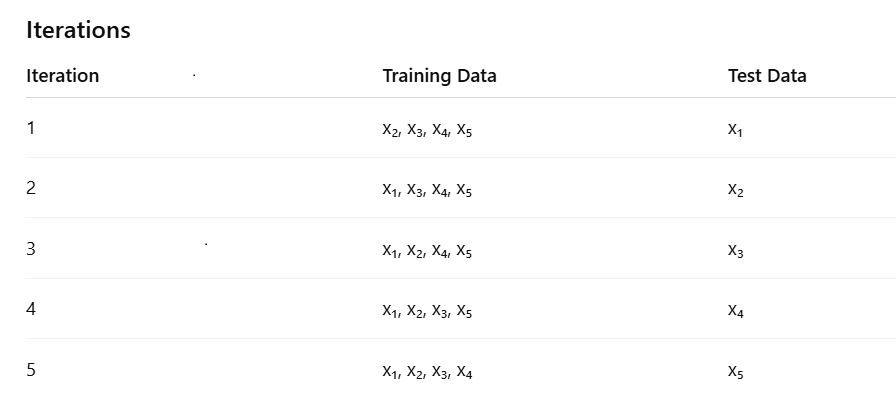

Leave-One-Out Cross-Validation (LOOCV)

LOOCV is an extreme case of K-Fold where:

K = number of data points

Each observation is used once as the test set

Pros

Very low bias

Uses almost the entire dataset for training

Cons

Computationally expensive

High variance

Not suitable for large datasets

1️⃣ Core Idea (In One Line)

Train on (n − 1) samples and test on the remaining 1 sample; repeat for every sample.

2️⃣ Step-by-Step Explanation

Assume you have 5 data points:

$D = \{x_1, x_2, x_3, x_4, x_5\}$
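Continuing with these five points, the LOOCV iterations can be listed with a short sketch. The helper name `leave_one_out` is hypothetical; scikit-learn provides the same splitting as `LeaveOneOut`. With n = 5 points the model is trained 5 times, each time on 4 points, and the final LOOCV score is the average of the 5 single-sample test results.

```python
def leave_one_out(samples):
    """Yield (train, test) pairs in which each sample is the test set exactly once."""
    for i in range(len(samples)):
        train = samples[:i] + samples[i + 1:]  # the other n - 1 samples
        test = [samples[i]]                    # the single held-out sample
        yield train, test


D = ["x1", "x2", "x3", "x4", "x5"]
for iteration, (train, test) in enumerate(leave_one_out(D), start=1):
    print(f"Iteration {iteration}: train on {train}, test on {test}")
# First line printed: Iteration 1: train on ['x2', 'x3', 'x4', 'x5'], test on ['x1']
```

The number of iterations equals the number of samples, which is exactly why LOOCV becomes expensive on large datasets.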

Holdout Validation

Holdout Validation is one of the simplest and earliest model evaluation techniques in machine learning. It is closely related to the Train–Test Split method and is often used as a quick baseline approach.

What is Holdout Validation?

In Holdout Validation, the dataset is divided into two disjoint parts:

Training set → Used to train the model

Test set (the holdout set) → Used only once, to evaluate the model

The model is trained on the training set and evaluated on the holdout (test) set.

The key idea is that the test data is held out and never seen during training.

Typical Data Split Ratios

70% training / 30% testing

80% training / 20% testing (most common)

90% training / 10% testing

Step-by-Step Workflow

Randomly shuffle the dataset

Split data into training and testing sets

Train the model using the training data

Evaluate performance on the holdout test data

Simple Example

Suppose you have 1000 samples:

Training set → 800 samples

Test set → 200 samples

The model learns from 800 samples and its accuracy is measured on the remaining 200 unseen samples.
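The workflow and the 1000-sample example above can be sketched as follows. `holdout_split` is a hypothetical helper written for illustration, and the fixed `seed` is an assumption added so the split is reproducible. scikit-learn's `train_test_split` (with `test_size=0.2`) does the same job for real datasets.

```python
import random


def holdout_split(samples, test_fraction=0.2, seed=42):
    """Shuffle once, then reserve `test_fraction` of the samples as the holdout test set."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)          # Step 1: randomly shuffle
    n_train = len(shuffled) - round(len(shuffled) * test_fraction)
    return shuffled[:n_train], shuffled[n_train:]  # Step 2: disjoint train/test sets


data = list(range(1000))  # stand-ins for 1000 samples
train, test = holdout_split(data, test_fraction=0.2)
print(len(train), len(test))  # 800 200
# Steps 3 and 4: fit the model on `train` once, then score it on `test` once.
```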

Advantages of Holdout Validation

Very simple and fast to implement

Computationally inexpensive

Suitable for large datasets

Useful for quick baseline evaluation

Limitations of Holdout Validation

Performance depends on one random split

High variance in results

Not reliable for small datasets

Poor choice for imbalanced datasets unless stratified
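The first and last limitations can be checked with a small experiment on the 90/10 label split used earlier. The sketch below, whose helper names are hypothetical, draws several purely random 20% holdout sets and counts the Class 0 samples in each; that count can change with the seed, so a single split may over- or under-represent the minority class. A stratified holdout, which takes 20% of each class separately, always ends up with 2 Class 0 and 18 Class 1 samples. scikit-learn's `train_test_split` supports this through its `stratify` argument.

```python
import random
from collections import Counter


def minority_in_test(labels, test_size, seed):
    """Count Class 0 samples that land in a purely random holdout test set."""
    indices = list(range(len(labels)))
    random.Random(seed).shuffle(indices)
    return Counter(labels[i] for i in indices[:test_size])[0]


def stratified_holdout(labels, test_fraction, seed=0):
    """Hold out the same fraction of every class, so the test set keeps the class ratio."""
    rng = random.Random(seed)
    test = []
    for label in sorted(set(labels)):
        members = [i for i, y in enumerate(labels) if y == label]
        rng.shuffle(members)
        test.extend(members[:round(len(members) * test_fraction)])
    return test


labels = [1] * 90 + [0] * 10  # imbalanced: 90% Class 1, 10% Class 0
for seed in range(5):
    count = minority_in_test(labels, test_size=20, seed=seed)
    print(f"random split, seed {seed}: Class 0 samples in test = {count}")

test = stratified_holdout(labels, test_fraction=0.2)
print("stratified split: Class 0 samples in test =", Counter(labels[i] for i in test)[0])
```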

MCQs on Cross-Validation

1. What is the primary goal of cross-validation in machine learning?

A. To increase training accuracy

B. To reduce dataset size

C. To evaluate model performance on unseen data

D. To speed up model training

Correct Answer: C

2. In K-Fold Cross-Validation, what does K represent?

A. Number of features

B. Number of classes

C. Number of data points

D. Number of subsets the data is split into

Correct Answer: D

3. Which value of K is most commonly used in practice?

A. K = 2

B. K = 5 or 10

C. K = number of samples

D. K = number of features

Correct Answer: B

4. Stratified K-Fold Cross-Validation is mainly used for:

A. Regression problems

B. Time-series data

C. Classification problems with imbalanced classes

D. Feature selection

Correct Answer: C

5. What is the key advantage of Stratified K-Fold over normal K-Fold?

A. Faster execution

B. Lower bias

C. Maintains class distribution in each fold

D. Uses more training data

Correct Answer: C

6. In Leave-One-Out Cross-Validation (LOOCV), how many samples are used for testing in each iteration?

A. K samples

B. n−1 samples

C. 1 sample

D. 50% of the dataset

Correct Answer: C

7. Which of the following is a major disadvantage of LOOCV?

A. High bias

B. Low accuracy

C. High computational cost

D. Poor use of data

Correct Answer: C

8. Holdout Validation differs from cross-validation because it:

A. Uses multiple test sets

B. Uses only one train–test split

C. Always gives higher accuracy

D. Cannot be used for classification

Correct Answer: B

9. Which validation technique has the highest variance in performance estimates?

A. K-Fold Cross-Validation

B. Stratified K-Fold

C. Holdout Validation

D. LOOCV

Correct Answer: C

10. Which validation method is most suitable for very large datasets?

A. LOOCV

B. Stratified K-Fold

C. K-Fold with large K

D. Holdout Validation

Correct Answer: D

11. Which of the following provides the most reliable estimate of model performance for small datasets?

A. Holdout Validation

B. Train–Test Split

C. K-Fold Cross-Validation

D. Random guessing

Correct Answer: C

12. Which cross-validation method uses almost the entire dataset for training in each iteration?

A. Holdout Validation

B. K-Fold

C. Stratified K-Fold

D. Leave-One-Out Cross-Validation

Correct Answer: D

13. Which statement is TRUE about cross-validation?

A. It eliminates overfitting completely

B. It guarantees higher accuracy

C. It provides a more stable estimate of model performance

D. It replaces the need for a test set entirely

Correct Answer: C