Association Rules in Unsupervised Learning

Association rule learning is an unsupervised machine learning technique used to discover relationships, patterns, and co-occurrences within large datasets. Instead of predicting a target label, it explores how items or events tend to appear together, which makes it popular in market basket analysis, recommendation systems, and customer behavior analysis. Key concepts include support (how frequently items appear together), confidence (how often the consequent appears in transactions that contain the antecedent), and lift (how much more often the items co-occur than they would if they were independent). Algorithms like Apriori and FP-Growth search the space of item combinations efficiently to find rules that meet minimum support and confidence thresholds and are practically useful.

In practice, association rules help organizations understand which products are frequently bought together, which features users tend to use in combination, or which events commonly follow one another. This insight can drive smarter product bundling, cross-selling strategies, and personalized recommendations. Because it is unsupervised, the method does not require labeled data; instead, it relies on exploring raw transactional or event logs. Careful tuning of minimum support and confidence thresholds is essential to filter out noise and focus on the most meaningful patterns. When applied thoughtfully, association rule learning transforms complex, high-volume data into clear, actionable knowledge for decision-makers.

Association Rule Mining: A Complete Guide with Examples & MCQs

Association Rule Mining is a widely used technique in data mining and business analytics. It helps organizations discover hidden patterns, relationships, and correlations in large datasets, especially transactional data.

If you've ever seen:

- "Customers who bought X also bought Y"
- Product bundling suggestions on Amazon
- Supermarket combo offers

You've witnessed Association Rule Mining in action.

1. What is Association Rule Mining?

Association Rule Mining is a data mining technique used to discover interesting relationships (associations) between variables in large datasets.

It answers questions like:

- Which products are frequently purchased together?
- What services are commonly used together?
- What patterns exist in customer behavior?

It is widely used in:

- Retail analytics
- E-commerce recommendation systems
- Healthcare data analysis
- Fraud detection
- Cybersecurity event correlation

2. Key Terminology in Association Rules

2.1 Itemset

A collection of one or more items.

Example:

- {Milk, Bread}
- {Laptop, Mouse}

2.2 Support

Support measures how frequently an itemset appears in the dataset.

Support(A) = (Number of transactions containing A) / (Total number of transactions)

Example:

If Milk appears in 4 out of 10 transactions:

Support(Milk) = 4/10 = 0.4
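As a minimal sketch of the support formula, the Python snippet below counts how many transactions contain an itemset. The `support` helper and the ten baskets are hypothetical, constructed only so that Milk appears in 4 of 10 transactions as in the example.

```python
from typing import Iterable, List, Set


def support(itemset: Iterable[str], transactions: List[Set[str]]) -> float:
    """Fraction of transactions that contain every item in `itemset`."""
    if not transactions:
        raise ValueError("transactions must not be empty")
    items = set(itemset)
    # `items <= t` is a subset test: every item must be present in the basket.
    hits = sum(1 for t in transactions if items <= t)
    return hits / len(transactions)


# Hypothetical data: 10 baskets, 4 of which contain Milk.
transactions = (
    [{"Milk", "Bread"}] * 2
    + [{"Milk"}] * 2
    + [{"Bread"}] * 3
    + [{"Eggs"}] * 3
)

print(support({"Milk"}, transactions))  # 0.4
```

The same function works for larger itemsets, since the subset test checks all items at once.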

2.3 Confidence

Confidence measures how often items in Y appear in transactions that contain X.

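Confidence(X→Y) = Support(X∪Y) / Support(X)

Here Support(X∪Y) is the support of the combined itemset, that is, the fraction of transactions containing every item in X and every item in Y.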

It represents the conditional probability:

P(Y | X)

2.4 Lift

Lift measures how much more likely Y is to be purchased when X is purchased, compared with how often Y is purchased overall.

Lift(X→Y) = Confidence(X→Y) / Support(Y)

- Lift > 1 → Positive association
- Lift = 1 → Independent
- Lift < 1 → Negative association
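The confidence and lift definitions can be sketched in a few lines of Python. The function names (`support`, `confidence`, `lift`, `interpret`) and the eight baskets are assumptions made for illustration, not part of any particular library.

```python
from typing import List, Set


def support(itemset: Set[str], transactions: List[Set[str]]) -> float:
    """Fraction of transactions containing every item in `itemset`."""
    return sum(1 for t in transactions if itemset <= t) / len(transactions)


def confidence(x: Set[str], y: Set[str], transactions: List[Set[str]]) -> float:
    """Confidence(X -> Y) = Support(X u Y) / Support(X)."""
    return support(x | y, transactions) / support(x, transactions)


def lift(x: Set[str], y: Set[str], transactions: List[Set[str]]) -> float:
    """Lift(X -> Y) = Confidence(X -> Y) / Support(Y)."""
    return confidence(x, y, transactions) / support(y, transactions)


def interpret(value: float) -> str:
    """Map a lift value to the three cases listed above."""
    # Exact equality with 1 is rare on real data; a tolerance may be preferable.
    if value > 1:
        return "positive association"
    if value < 1:
        return "negative association"
    return "independent"


# Hypothetical baskets: Laptop in 4 of 8, Mouse in 4 of 8, both in 3 of 8.
baskets = (
    [{"Laptop", "Mouse"}] * 3
    + [{"Laptop"}]
    + [{"Mouse"}]
    + [{"Keyboard"}] * 3
)

c = confidence({"Laptop"}, {"Mouse"}, baskets)
l = lift({"Laptop"}, {"Mouse"}, baskets)
print(c, l, interpret(l))  # 0.75 1.5 positive association
```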

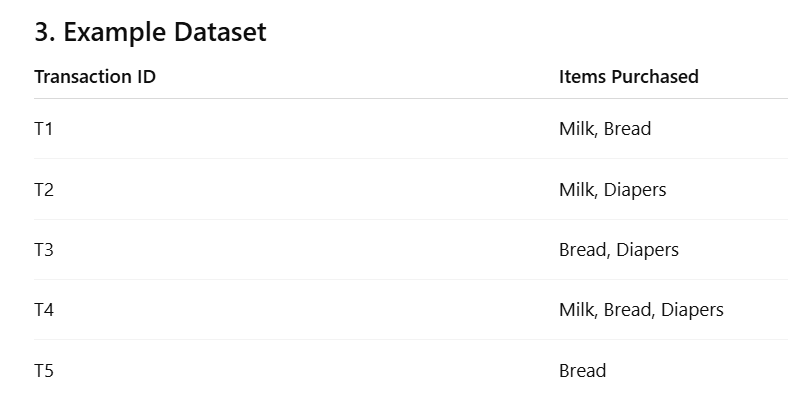

Let's calculate:

- Support(Milk) = 3/5 = 0.6
- Support(Bread) = 4/5 = 0.8
- Support(Milk, Bread) = 2/5 = 0.4

Confidence(Milk → Bread) = 0.4 / 0.6 ≈ 0.67

Lift(Milk → Bread) = 0.4 / (0.6 × 0.8) ≈ 0.83

Since Lift < 1, Milk and Bread are weakly negatively associated in this dataset.
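To check this arithmetic without rounding drift, the sketch below redoes the calculation with exact fractions, using only the counts stated above (5 transactions, Milk in 3, Bread in 4, both in 2). The variable names are illustrative. Rounding the confidence to 0.67 before dividing gives about 0.84, while exact fractions give 5/6 ≈ 0.83.

```python
from fractions import Fraction

# Counts taken from the worked example above.
n_total = 5
n_milk = 3
n_bread = 4
n_both = 2

support_milk = Fraction(n_milk, n_total)    # 3/5
support_bread = Fraction(n_bread, n_total)  # 4/5
support_both = Fraction(n_both, n_total)    # 2/5

confidence = support_both / support_milk    # 2/3
lift = confidence / support_bread           # 5/6

print(confidence, round(float(confidence), 2))  # 2/3 0.67
print(lift, round(float(lift), 2))              # 5/6 0.83
```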

4. Apriori Algorithm

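The most famous algorithm for association rule mining is the Apriori algorithm.

Principle:

If an itemset is frequent, all its subsets must also be frequent. Equivalently, if an itemset is infrequent, none of its supersets can be frequent, so they can be skipped without counting.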

Steps:

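- Generate frequent 1-itemsets
- Generate candidate 2-itemsets from the frequent 1-itemsets
- Prune candidates that fall below minimum support
- Repeat with larger itemsets until no new frequent itemsets are found
- Generate rules from the frequent itemsets using a confidence threshold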
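The steps above can be sketched in plain Python as follows. This is a simplified, unoptimized illustration of the level-wise idea, not a reference implementation. The function names (`apriori`, `generate_rules`), the thresholds, and the five baskets are all hypothetical.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Set, Tuple

Itemset = FrozenSet[str]


def apriori(transactions: List[Set[str]], min_support: float) -> Dict[Itemset, int]:
    """Return every frequent itemset mapped to its transaction count."""
    n = len(transactions)

    def count(candidate: Itemset) -> int:
        return sum(1 for t in transactions if candidate <= t)

    def keep_frequent(candidates: Set[Itemset]) -> Dict[Itemset, int]:
        counted = {c: count(c) for c in candidates}
        return {c: k for c, k in counted.items() if k / n >= min_support}

    # Step 1: frequent 1-itemsets.
    current = keep_frequent({frozenset([i]) for t in transactions for i in t})
    frequent = dict(current)
    size = 2
    while current:
        prev = set(current)
        # Step 2: join frequent (size-1)-itemsets into candidates of `size` items.
        candidates = {a | b for a in prev for b in prev if len(a | b) == size}
        # Downward closure: drop candidates that have an infrequent subset.
        candidates = {
            c for c in candidates
            if all(frozenset(s) in prev for s in combinations(c, size - 1))
        }
        # Step 3: prune by minimum support; Step 4: repeat with larger itemsets.
        current = keep_frequent(candidates)
        frequent.update(current)
        size += 1
    return frequent


def generate_rules(
    frequent: Dict[Itemset, int], min_confidence: float
) -> List[Tuple[Itemset, Itemset, float]]:
    """Step 5: emit (X, Y, confidence) for every rule X -> Y above the threshold."""
    rules = []
    for itemset, k in frequent.items():
        for r in range(1, len(itemset)):
            for antecedent in combinations(itemset, r):
                x = frozenset(antecedent)
                # x is frequent by downward closure, so the lookup cannot fail.
                conf = k / frequent[x]
                if conf >= min_confidence:
                    rules.append((x, itemset - x, conf))
    return rules


# Hypothetical baskets for illustration only.
baskets = [
    {"Milk", "Bread", "Butter"},
    {"Milk", "Bread"},
    {"Bread", "Butter"},
    {"Milk", "Butter"},
    {"Milk", "Bread", "Butter"},
]

frequent = apriori(baskets, min_support=0.5)
rules = generate_rules(frequent, min_confidence=0.7)
for x, y, conf in rules:
    print(sorted(x), "->", sorted(y), conf)
```

With these baskets, the three single items and the three pairs are frequent, while {Milk, Bread, Butter} appears in only 2 of 5 transactions and is pruned. Storing counts rather than floating-point supports keeps the confidence calculation exact.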

5. Applications of Association Rule Mining

1. Market Basket Analysis

Identify products frequently purchased together.

2. Recommendation Systems

Amazon-style product recommendations.

3. Healthcare

Discover disease-drug relationships.

4. Cybersecurity

Detect unusual event combinations (useful in SIEM analysis).

5. Fraud Detection

Find suspicious transaction patterns.

6. Advantages

- Easy to interpret
- Unsupervised learning method
- Highly useful in retail analytics
- Scalable with algorithms like FP-Growth

7. Limitations

- Can generate too many rules
- High computational cost
- Requires careful threshold tuning
- High-confidence rules can be misleading if lift is not checked
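The last limitation is easiest to see with numbers. In the sketch below, all counts are hypothetical: a rule Tea → Coffee reaches 80% confidence, yet its lift is below 1 because Coffee is already in 90% of baskets.

```python
# Hypothetical counts for illustration only.
n_total = 100   # all baskets
n_tea = 25      # baskets containing Tea
n_coffee = 90   # baskets containing Coffee
n_both = 20     # baskets containing both

confidence = n_both / n_tea          # P(Coffee | Tea)
support_coffee = n_coffee / n_total  # P(Coffee)
lift = confidence / support_coffee

print(f"confidence = {confidence:.2f}")  # confidence = 0.80
print(f"lift = {lift:.2f}")              # lift = 0.89
```

Judged by confidence alone, the rule looks strong. Lift shows that buying Tea is associated with a slightly lower chance of buying Coffee than the baseline.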

MCQs on Association Rule Mining (With Answers & Explanations)

Q1. Association rule mining is primarily used to:

A. Classify data

B. Predict continuous values

C. Discover relationships among variables

D. Reduce dimensionality

Answer: C

Explanation: It identifies relationships and co-occurrence patterns in large datasets.

Q2. Support measures:

A. Accuracy of prediction

B. Frequency of occurrence

C. Conditional probability

D. Variance

Answer: B

Explanation: Support indicates how frequently an itemset appears in the dataset.

Q3. Confidence represents:

A. P(X)

B. P(Y)

C. P(Y|X)

D. P(X|Y)

Answer: C

Explanation: Confidence is the conditional probability of Y given X.

Q4. If Lift = 1, then:

A. Positive association

B. Negative association

C. Independent

D. Perfect correlation

Answer: C

Explanation: Lift = 1 means X and Y are independent.

Q5. Apriori algorithm is based on:

A. Greedy search

B. Divide and conquer

C. Downward closure property

D. Gradient descent

Answer: C

Explanation: If a set is frequent, all its subsets must also be frequent.

Q6. Which is NOT a measure in association rules?

A. Support

B. Confidence

C. Lift

D. Entropy

Answer: D

Explanation: Entropy is an impurity measure used in decision trees, not a standard association rule metric.

Q7. Minimum support is used to:

A. Remove rare itemsets

B. Increase rule complexity

C. Increase accuracy

D. Reduce lift

Answer: A

Explanation: It prunes infrequent itemsets.

Q8. Market Basket Analysis is an example of:

A. Clustering

B. Association rule mining

C. Regression

D. Classification

Answer: B

Explanation: It finds co-purchased items.

Q9. If Confidence(X→Y) = 1:

A. X always implies Y

B. Y always implies X

C. X and Y are independent

D. Support is zero

Answer: A

Explanation: Whenever X occurs, Y also occurs.

Q10. FP-Growth is:

A. A faster alternative to Apriori

B. A regression model

C. A classification model

D. A clustering method

Answer: A

Explanation: FP-Growth avoids candidate generation and is more efficient.

📗 Numerical-Based MCQs (Higher Level)

Q11. If Support(X) = 0.5 and Support(X,Y) = 0.3, then Confidence(X→Y) is:

A. 0.5

B. 0.6

C. 0.3

D. 0.8

Answer: B

Explanation: 0.3 / 0.5 = 0.6

Q12. If Confidence(X→Y)=0.8 and Support(Y)=0.4, Lift is:

A. 2

B. 1

C. 0.5

D. 3

Answer: A

Explanation: 0.8 / 0.4 = 2 (strong positive association)

Q13. If Lift < 1, it indicates:

A. Positive relation

B. Negative relation

C. Perfect dependency

D. High confidence

Answer: B

Explanation: Less than 1 means negative association.

Q14. The first step in Apriori is:

A. Generate rules

B. Prune rules

C. Find frequent 1-itemsets

D. Calculate lift

Answer: C

Explanation: Apriori starts by counting the frequency of single items.

Q15. Association rule mining is:

A. Supervised learning

B. Reinforcement learning

C. Unsupervised learning

D. Deep learning

Answer: C

Explanation: It does not use labeled data.